Lawmakers wrestle with congressional use of AI amid rising risk of deepfakes

WASHINGTON — As deepfake scandals continue to rock both politics and pop culture, House and Senate lawmakers are grappling with the risks of rapidly advancing technology, while also trying to capitalize on the benefits of artificial intelligence for their own internal use.

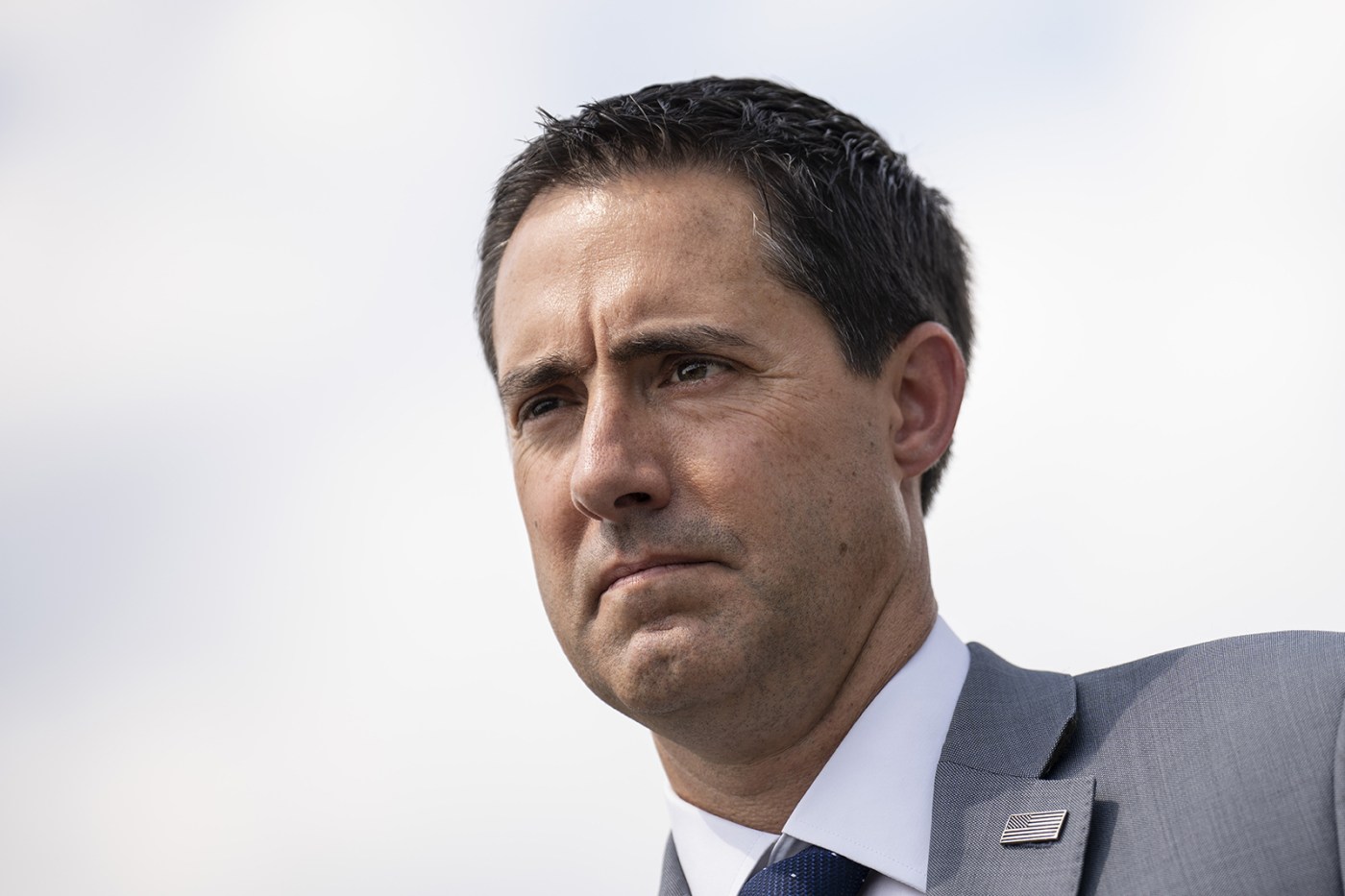

At a Tuesday hearing on the use of AI in the legislative branch, House Administration Chair Bryan Steil and ranking member Joseph D. Morelle each referenced the fake robocall purportedly from President Joe Biden that instructed voters to stay home during New Hampshire’s presidential primary. And Morelle mentioned the AI-generated lewd images of pop star Taylor Swift that recently circulated online as another example of tech run amok.

Congress should take note, Morelle said.

“Threats and risks exist here in the legislative branch, too. And we need to be mindful of them as we establish what will be the operating culture for congressional AI use for years to come,” Morelle said.

If holding congressional hearings counts as being mindful, his colleagues are heeding the call.

Last week, the Senate Rules and Administration Committee hosted a similar meeting on the use of AI at the Library of Congress, Smithsonian Institution and the Government Publishing Office. A Senate Judiciary subpanel hosted a hearing the same day on the use of AI in criminal investigations. And on Monday, a House subpanel looked at the future of data privacy and AI at the Department of Veterans Affairs.

“This seems to be a very interesting topic because I don’t often see this room packed full of visitors,” joked Oklahoma Republican Rep. Stephanie Bice on Tuesday, looking around the Longworth Building committee room.

The crowd heard testimony from representatives of GPO, the Library of Congress, the Office of the Chief Administrative Officer and the Government Accountability Office. All described uses for the technology that they said could empower staffers, democratize government information and make Congress more efficient.

Hugh Halpern, director of GPO, said AI could be helpful for quality control purposes in manufacturing the country’s passports.

And John Clocker, deputy chief administrative officer, said large language models like ChatGPT Plus — which was approved for limited House use in June 2023 — are useful tools for proofreading and effective at “producing first drafts of memos, press releases, letters, summarizing reports, transcripts and large datasets.”

AI is also being used in experiments with optical character recognition to assist visually impaired patrons at the Library of Congress, to improve digital accessibility to copyright records and to help summarize legislation, Steil said.

Bice’s Modernization Subcommittee, which she noted will host AI-related hearings later this year, published a series of flash reports through the fall and winter. Those reports cataloged the various uses of AI throughout the legislative branch, including the ways it could benefit members, staff and the public.

But legislative branch support agencies are also wary of the risks and scrambling to establish processes and procedures for the responsible use of AI.

According to Clocker, the CAO created a House-wide advisory group to help offices maximize their use of AI technology and conducted a study of the House’s AI governance policies. That assessment found the House needed additional generative AI policies, user guidance and training.

“AI is new to many of us, making training a critical element for successful implementation,” Steil said. “I was once told that AI won’t replace humans, but humans that use AI could replace those who aren’t using AI.”

In addition to drafting internal guidelines, lawmakers have introduced a flurry of bills as they weigh how to address emerging AI-related problems.

Morelle recently reintroduced a bill that would make the distribution of deepfake pornography a federal crime.

Minnesota Democratic Sen. Amy Klobuchar, who chairs the Senate Rules and Administration Committee, in November joined a bill introduced by South Dakota Republican Sen. John Thune that seeks to provide a framework for AI innovation and accountability. And in May of last year she introduced a bill that would require AI-generated political advertisements to carry a disclaimer.

“AI has the potential, as we know, to lead to incredible innovation by supercharging scientific research, improving access to information and increasing productivity,” Klobuchar said at last week’s Rules hearing. “But, like any emerging technology, AI comes with significant risks, and our laws need to be as sophisticated as the potential threats … to our own democracy.”

_____

©2024 CQ-Roll Call, Inc., All Rights Reserved. Visit cqrollcall.com. Distributed by Tribune Content Agency, LLC.