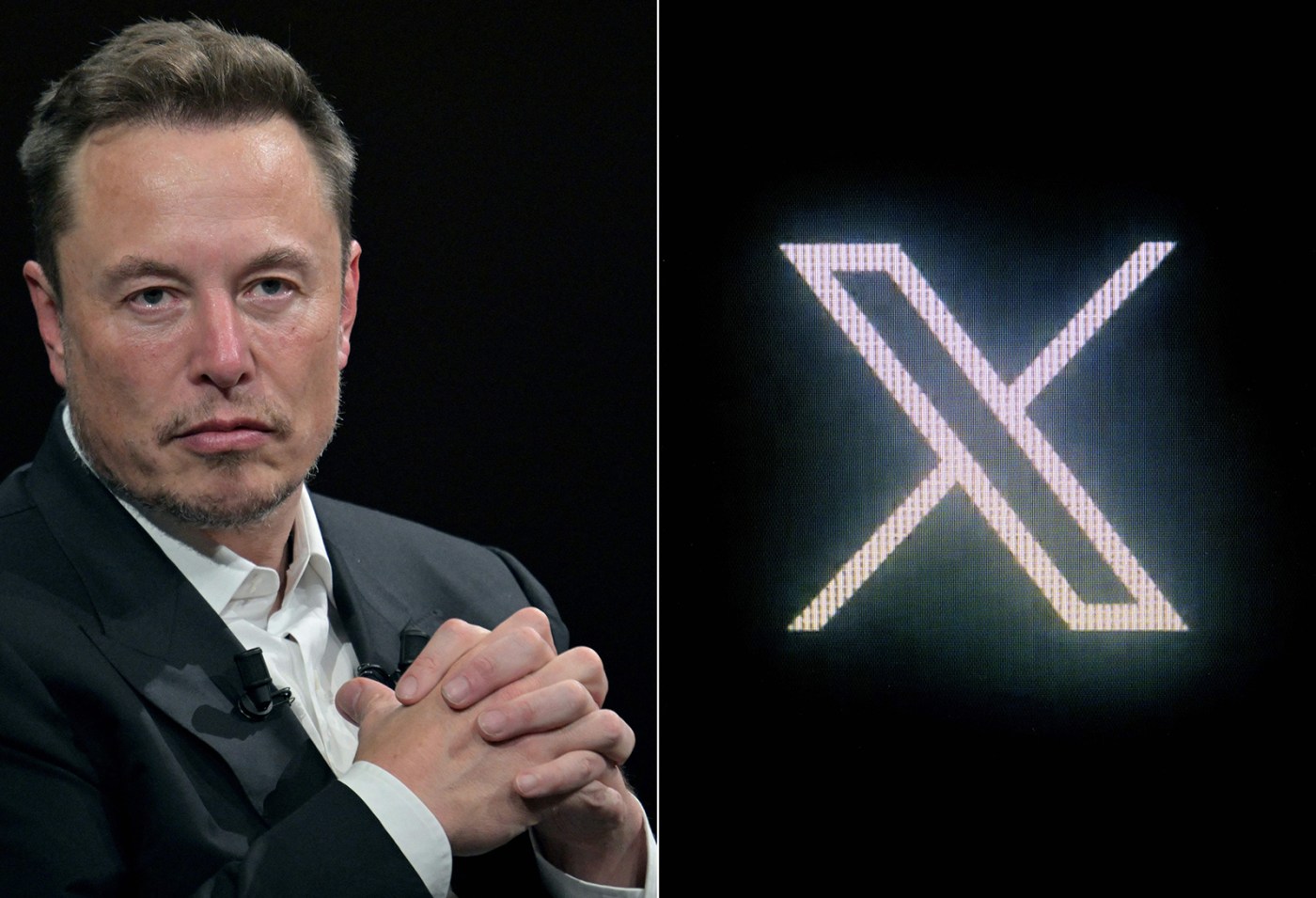

US heads into post-truth election as platforms shun arbiter role

Elon Musk’s transformation of Twitter into the more free-for-all X is the most dramatic case, but other platforms are also changing their approach to monitoring. Meta Platforms Inc. has sought to downplay news and political content on Facebook, Instagram and its new Threads app. Google’s YouTube has decided that purging falsehoods about the 2020 election restricts too much political speech (Meta has a similar policy).

The shift is happening just as artificial intelligence tools offer new and accessible ways to supercharge the spread of false content — and deepening social divisions mean there’s already less trust. In Davos, where global leaders gathered this week, the World Economic Forum ranked misinformation as the biggest short-term danger in its Global Risks Report.

While platforms still require more transparency for advertisements, organic disinformation that spreads without paid placement is a “fundamental threat to American democracy,” especially as companies reevaluate their moderation practices, says Mark Jablonowski, chief technology officer for Democratic ad-tech firm DSPolitical.

“As we head into 2024, I worry they are inviting a perfect storm of electoral confusion and interference by refusing to adequately address the proliferation of false viral moments,” Jablonowski said. “Left uncorrected they could take root in the consciousness of voters and impact eventual election outcomes.”

Risky Year

With elections in some 60 countries besides the U.S., 2024 is a risky year to be testing the new dynamic.

The American campaign formally got under way last week as former President Donald Trump scored a big win in the Iowa caucus — a step toward the Republican nomination and a potential rematch with President Joe Biden in November. With both men viscerally unpopular in pockets of the country, that carries the risk of real-world violence, as in the Jan. 6 attack on the Capitol before Biden’s inauguration in 2021.

X, Meta and YouTube, owned by Alphabet Inc.’s Google, have policies against content inciting violence or misleading people about how to vote.

YouTube spokesperson Ivy Choi said the platform recommends authoritative news sources and the company’s “commitment to supporting the 2024 election is steadfast, and our elections-focused teams remain vigilant.”

Meta spokesperson Corey Chambliss said the company’s “integrity efforts continue to lead the industry, and with each election we incorporate the lessons we’ve learned to help stay ahead of emerging threats.” X declined to comment.

‘They’re Gone’

Still, researchers and democracy advocates see risks in how companies are approaching online moderation and election integrity efforts after major shifts in the broader tech industry.

One motive is financial. Tech firms laid off hundreds of thousands of people last year, with some leaders saying their goal was to preserve core engineering teams and shrink other groups.

Mark Zuckerberg described Meta’s more than 20,000 job cuts, which investors have rewarded handsomely, as part of a “Year of Efficiency.” He suggested that the trend to downsize non-engineering staff has been “good for the industry.”

Musk said he dismantled X’s “Election Integrity” team — “Yeah, they’re gone,” he posted — and cast doubt on the work it did in previous campaigns.

There has also been political pressure. U.S. conservatives have long argued that west-coast tech firms shouldn’t get to define the truth about sensitive political and social issues.

Republicans lambasted social media companies for suppressing a politically damaging story about Biden’s son before the 2020 vote on the grounds, now widely acknowledged to have been unfounded, that it was part of a Russian disinformation effort. Zuckerberg later said he didn’t enjoy the “trade offs” involved in acting aggressively to remove bad content while knowing there would be some overreach.

Republicans have used their House majority — and subpoena power — to investigate the academic institutions and Biden administration officials that tracked disinformation and flagged problematic content to major online platforms, in an inquiry largely focused on pandemic-era measures. Some researchers named in that probe have been harassed and threatened.

‘Uncertain Events’

The Supreme Court is reviewing a ruling by lower courts that the Biden administration violated the First Amendment by asking social media companies to crack down on misinformation about Covid-19.

It’s hard to establish the truth amid rapidly changing health guidance, and social media companies in 2020 got burned for trying, says Emerson Brooking, a senior fellow at the Atlantic Council.

“Covid probably demonstrated the limits of trying to enforce at the speed of fast-moving and uncertain events,” he says.

One effect of all these disputes has been to nudge social media companies toward less controversial subject matter, the kind of content that has helped TikTok thrive.

When Meta introduced Threads last year as a competitor to Twitter, Adam Mosseri, the executive in charge, said the new platform was not intended to be a place for “politics or hard news.” He said it would aim instead for lifestyle and entertainment content that wouldn’t come with the same “scrutiny, negativity (let’s be honest), or integrity risks” as news.

‘Rapid-Fire Deepfakes’

Meta says its 2024 election plan will be similar to the protocols it had in place for previous votes, including a ban on any new political ads in the final week before election day. The company has roughly 40,000 people (including outside contractors) working on safety issues, up from about 35,000 in 2020, according to Chambliss, the Meta spokesperson. And there will be one new policy: a requirement for advertisers to disclose when material has been created or altered by AI.

That highlights a rising concern about deepfakes — images, audio or videos created using AI to portray things that never happened.

The Biden campaign has already assembled a team of legal experts poised to quickly challenge online disinformation, including the use of deepfakes in ways that could violate copyright law or statutes against impersonation, according to a person familiar with the plans.

Brooking, of the Atlantic Council, said there hasn’t been much evidence yet of AI-generated content having a material impact on the flow of disinformation, in part because the examples that have gotten the most attention — like a fabricated image of the pope in a puffer jacket, or false reports of an explosion at the Pentagon that briefly roiled markets — were quickly debunked. But he warned that is likely to change.

Deepfakes can “still create doubt on short timeframes where every moment is critical,” Brooking said. “So something like an election day is a time where generative AI and rapid-fire deepfakes could still have a deep impact.”

—With assistance from Daniel Zuidijk.

___

©2024 Bloomberg News. Visit at bloomberg.com. Distributed by Tribune Content Agency, LLC.